Introduction

I needed to test my AI Project Guide's ability to accelerate development of something not completely trivial, but that I could do quickly. I wanted something fun to do on a Friday night. Creating an infinite-terrain demo using a cosine surface in Three.js checked all the boxes, hopefully without becoming too involved.

Why the "cosine surface" concept? Years ago I learned enough math and BASIC programming to create one on an early Apple computer. It required minutes to draw a single frame in 280x192 resolution. What would it be like to create one now?

What is a Cosine Surface Anyway?

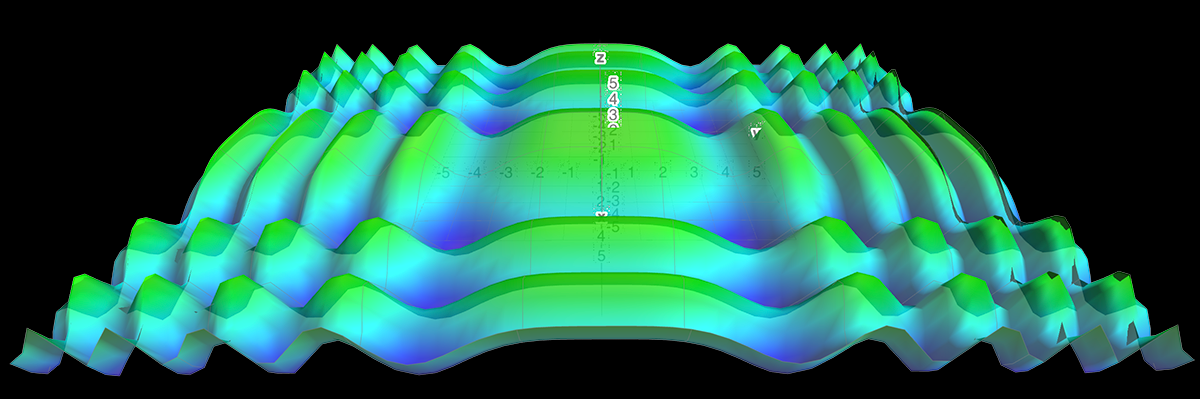

A "cosine surface" can be created using a function of two variables, where the function's value at the current x, z position determines the height of the surface at that point. These functions can be relatively simple, for example y = cos(x) + cos(z), or as intricate as you'd like to make them.

Scaling the components creates larger or faster terrain effects in the corresponding direction. For example, y = cos(x) + 2cos(z) has taller ridges or waves along z, while scaling the cos(x) term instead would cause larger variation along x.

Similarly, increasing the multiplier inside the parentheses, as in cos(2x), increases the frequency of these variations in that direction.

The surface produced using z = cos(0.2x²) + cos(0.2y²), plotted with z as the height axis

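As a minimal sketch of the formulas above, assuming y is the height axis (Three.js treats y as "up" by default), a height function with a separate amplitude and frequency per axis might look like this. The names `SurfaceParams` and `heightAt` are hypothetical, not taken from the project's code.

```typescript
// Hypothetical parameters: amplitude scales a term's height,
// frequency scales the argument inside cos().
interface SurfaceParams {
  ampX: number;  // amplitude of the cos(x) term
  ampZ: number;  // amplitude of the cos(z) term
  freqX: number; // frequency multiplier inside cos() along x
  freqZ: number; // frequency multiplier inside cos() along z
}

// y = ampX * cos(freqX * x) + ampZ * cos(freqZ * z)
function heightAt(x: number, z: number, p: SurfaceParams): number {
  return p.ampX * Math.cos(p.freqX * x) + p.ampZ * Math.cos(p.freqZ * z);
}

// y = cos(x) + cos(z)
const basic: SurfaceParams = { ampX: 1, ampZ: 1, freqX: 1, freqZ: 1 };
// y = cos(x) + 2cos(z): taller waves along z
const tallZ: SurfaceParams = { ...basic, ampZ: 2 };
// y = cos(x) + cos(2z): waves twice as frequent along z
const fastZ: SurfaceParams = { ...basic, freqZ: 2 };

console.log(heightAt(0, 0, basic)); // 2
console.log(heightAt(0, 0, tallZ)); // 3
console.log(heightAt(0, Math.PI / 2, fastZ)); // trough of cos(2z) cancels the cos(x) peak
```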

Design & Initial Construction

I'll use my manta-templates Next.js template as a starting point, and hopefully stuff this whole thing into a reusable Three.js card. I'll need it to run smoothly and look nice on devices from desktops to phones, within reason. I want the terrain generation itself to at least be configurable in code, even if I won't provide a UI for it right now.

Configuration doesn't need anything hugely sophisticated, but at minimum I want the ability to affect amplitude and frequency of the terrain features, and hopefully have them vary over time.
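One way to cover both requirements, sketched under the assumption that "vary over time" means slowly modulating the amplitude, is a small config object plus a sinusoidal modulation. `TerrainConfig`, `amplitudeAt`, `heightAt`, and all field values are illustrative, not the project's actual configuration.

```typescript
// Hypothetical configuration shape; not the project's real API.
interface TerrainConfig {
  baseAmplitude: number;  // average height scale in world units
  amplitudeSwing: number; // fraction of baseAmplitude to vary by (0..1)
  swingPeriodSec: number; // seconds for one full cycle of variation
  frequency: number;      // multiplier inside cos()
}

// Amplitude oscillates between base * (1 - swing) and base * (1 + swing).
function amplitudeAt(cfg: TerrainConfig, tSec: number): number {
  const phase = (2 * Math.PI * tSec) / cfg.swingPeriodSec;
  return cfg.baseAmplitude * (1 + cfg.amplitudeSwing * Math.sin(phase));
}

// Height at (x, z) for time tSec, using the time-varying amplitude.
function heightAt(x: number, z: number, tSec: number, cfg: TerrainConfig): number {
  const a = amplitudeAt(cfg, tSec);
  return a * (Math.cos(cfg.frequency * x) + Math.cos(cfg.frequency * z));
}

const cfg: TerrainConfig = {
  baseAmplitude: 400,
  amplitudeSwing: 0.5,
  swingPeriodSec: 20,
  frequency: 0.002,
};

console.log(amplitudeAt(cfg, 0));     // 400
console.log(amplitudeAt(cfg, 5));     // ≈ 600 (quarter period, sin = 1)
console.log(heightAt(0, 0, 5, cfg));  // ≈ 1200 (both cos terms at their peak)
```

Keeping amplitude and frequency in one object means a UI could be added later without touching the height math.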

UI needs are minimal: just display the surface in a container within any reasonable app.

Tooling

I decided to use the Next.js template from manta-templates, not because this app really needs Next.js, but because it provides an easily workable start, including some basic Three.js support baked in. I'll use Cursor for development, and pick models as needed from those available.

Initial Development

Using my AI Project Guide, with mainly Claude 4 Sonnet and occasionally Gemini 2.5 Pro in Cursor, I quickly arrived at a coherent concept, spec, and initial task list. The template provided a good starting point, so I could focus the AI on issues specific to the task at hand: displaying a reasonable surface with smooth animation.

Challenges and AI Pair Programming

From the initial build I could see numerous items that needed addressing. The generation ran fairly well but contained gaps and overlaps and, worse, began showing missing tiles after running for a short while. Working with AI to address these issues and produce a polished demo became one of the most interesting aspects of the project.

World Comprehension

The initial version did at least display something: a surface of sorts, though a chaotic one. Rotated incorrectly about at least one axis, it looked like a bumpy jumble of lines. At least the lines rendered at 60+ fps.

Coordinate Confusion

Untangling this revealed that even though Claude seemed to understand the coordinate axis system, it would repeatedly become confused, using vague words like "ahead" or "behind" and misinterpreting them, causing it to reverse signs and leading to scrambled geometry. In particular it kept deciding that "in the direction of negative z" meant making the z-coordinate less negative, when the opposite was correct. I straightened it out, but a clear rules anchor would have avoided bugs and probably saved an hour.
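A rules anchor of this kind can be as simple as stating the convention once in code and routing every "ahead/behind" decision through it. This sketch assumes the camera travels toward negative z (consistent with the negative camera speed given later); `FORWARD_Z`, `moveForward`, and `isAhead` are hypothetical helpers.

```typescript
// Convention: the camera travels toward NEGATIVE z.
// "Forward" therefore means the z-coordinate becomes MORE negative.
const FORWARD_Z = -1;

// Advance a z-coordinate by a positive distance in the direction of travel.
function moveForward(z: number, distance: number): number {
  return z + FORWARD_Z * distance;
}

// A point is "ahead" of the camera if it lies further along the travel direction.
function isAhead(pointZ: number, cameraZ: number): boolean {
  return (pointZ - cameraZ) * FORWARD_Z > 0;
}

// Three.js cameras look down their local -z axis by default, so an
// unrotated camera already faces the direction of travel.
console.log(moveForward(-1000, 500)); // -1500
console.log(isAhead(-2000, -1000));   // true
console.log(isAhead(0, -1000));       // false
```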

Update 2025-08-07: GPT-5 was released today, and I wanted to add one more note here. I probably could have simplified this debugging by not letting the AI use a rotated coordinate system, but I wanted to confirm something first. I asked GPT-5 to analyze the coordinate system in use, and it nailed it on the first try, missing no details. A huge upgrade that enables the next level of collaboration!

With coordinates finally behaving correctly, the next challenge emerged: determining how much terrain to actually render. Too little and you'd see gaps at the edges; too much and you'd waste precious GPU cycles calculating geometry that would never be visible.

Field of View

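Field of view, usually expressed in degrees, is the angle my view spans. The further ahead I look, the wider the visible area becomes, growing in proportion to distance according to this field of view. The same would normally be true of height, and that is where the confusion came in. (In Three.js, PerspectiveCamera.fov is in fact the vertical angle; the horizontal extent follows from the aspect ratio.)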

I added documents specifically on Three.js, and often make the context7 MCP server available for the Agent to use in retrieving additional documentation. In the case of Three.js, the following example appears in its documentation:

How can scene scale be preserved on resize? We want all objects, regardless of their distance from the camera, to appear the same size, even as the window is resized. The key equation to solving this is this formula for the visible height at a given distance:

visible_height = 2 * Math.tan( ( Math.PI / 180 ) * camera.fov / 2 ) * distance_from_camera;

While this is useful, it confused the LLMs (all of them) into thinking that our visible dimension depended on the height of our camera, not on the distance from it. In our case, we can't see past the far clip plane, so as long as our gaze isn't straight down, we can approximate the distance as the distance to the far clip plane.
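The Three.js formula above, extended to width, is sketched below. Because `PerspectiveCamera.fov` is the vertical field of view in degrees, the visible width is the visible height multiplied by the camera's aspect ratio. The function names and the example values (60° fov, 16:9 aspect, a far plane at 28,000 units) are assumptions for illustration.

```typescript
const DEG2RAD = Math.PI / 180;

// Visible height of the view frustum at a given distance measured along the
// camera's viewing direction. fovDeg is the VERTICAL field of view,
// as in THREE.PerspectiveCamera.fov.
function visibleHeight(fovDeg: number, distance: number): number {
  return 2 * Math.tan((fovDeg * DEG2RAD) / 2) * distance;
}

// Visible width at the same distance: the height scaled by the aspect ratio.
function visibleWidth(fovDeg: number, aspect: number, distance: number): number {
  return visibleHeight(fovDeg, distance) * aspect;
}

// `distance` is measured from the camera, not the camera's height above the
// ground. The frustum is widest at the far clip plane, so using that distance
// gives the widest slice of terrain that can ever be drawn.
const far = 28000;
console.log(visibleHeight(60, far));        // ≈ 32331.6
console.log(visibleWidth(60, 16 / 9, far)); // ≈ 57478.4
```

Both results grow linearly with distance, which is exactly the property the LLMs kept attaching to camera height instead.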

I made this change for Claude, but it kept re-breaking it, even after I also told it that unless we were looking straight ahead, we were looking at a point below (or above) ourselves, and that the distance to that point must be greater than the level distance.

Attempts to illustrate this failed until I phrased it much like a simple mathematical proof about camera height and viewing distance. Once I established that relationship: no more errors, no more breaking the code. Sadly, I had the perfect statement here but lost it in Cursor's conversation history, a common hazard of AI development. Remember to save important prompt snippets!
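The original prompt is lost, but the relationship it had to convey can be reconstructed as a one-line argument. This is a reconstruction, not the author's wording; here h is the camera height above the ground, d_h the horizontal (level) distance to the point being viewed, and d the actual distance along the line of sight, with symbols chosen for this illustration.

```latex
d = \sqrt{d_h^2 + h^2} \;\ge\; d_h,
\qquad\text{so}\qquad
2\tan\!\left(\tfrac{\theta}{2}\right) d \;\ge\; 2\tan\!\left(\tfrac{\theta}{2}\right) d_h,
```

with equality only when h = 0, where θ is the field of view in the direction being measured. Looking down from any height, the true viewing distance, and therefore the visible width, is never smaller than the level-ground value.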

Replacing Magic Numbers with Calculations

One of the more interesting aspects I found while reviewing code was the use of "magic numbers" that actually worked pretty well, but felt too much like luck. The system needed to determine how many terrain tiles to render based on viewport width, and the working formula was suspiciously simple: viewportWidth / 100.

The investigation revealed the real geometry: our camera sits at height 3,600 units, looking down toward the horizon. This creates more complex 3D geometry than simple ground-level calculations:

- If camera were level: Distance = 28,000 units, view width = 32,312 units

- With elevated camera: Actual distance = √(28,000² + 3,600²) = 28,230 units, view width = 32,577 units

- Corrected tiles needed: 32,577 ÷ 2048 ≈ 15.9 tile widths, so up to 17 tiles once the partially visible tiles at the edges are counted
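The arithmetic above can be reproduced in a few lines. This sketch assumes a 60° horizontal field of view, which is what the ≈1.154 width-to-distance ratio in those figures implies (2·tan 30° ≈ 1.1547), and counts the worst case where neither view edge lines up with a tile boundary. With the unrounded ratio the width comes out near 32,598 rather than 32,577, which doesn't change the tile count. `tilesAcross` is a hypothetical name.

```typescript
const DEG2RAD = Math.PI / 180;

// Tiles needed to span the view at the far end of the terrain.
// levelDistance: horizontal distance to the far edge (e.g. the far clip plane)
// cameraHeight:  camera height above the ground
// hFovDeg:       HORIZONTAL field of view in degrees
// tileSize:      width of one tile in world units
function tilesAcross(
  levelDistance: number,
  cameraHeight: number,
  hFovDeg: number,
  tileSize: number,
): { lineOfSight: number; viewWidth: number; tiles: number } {
  // Looking down from a height, the line of sight is the hypotenuse.
  const lineOfSight = Math.hypot(levelDistance, cameraHeight);
  const viewWidth = 2 * Math.tan((hFovDeg * DEG2RAD) / 2) * lineOfSight;
  // A window W wide at an arbitrary offset can touch up to
  // ceil(W / tileSize) + 1 tiles (partial tiles at both edges).
  const tiles = Math.ceil(viewWidth / tileSize) + 1;
  return { lineOfSight, viewWidth, tiles };
}

const r = tilesAcross(28000, 3600, 60, 2048);
console.log(Math.round(r.lineOfSight)); // 28230
console.log(r.tiles);                   // 17
```

Unlike a fixed viewportWidth / 100, the count here depends only on camera geometry and tile size, so it stays the same across screen resolutions.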

The /100 "magic number" was accidentally approximating the visible tile count. It worked, but it was mathematically imprecise and inefficient on mobile devices. Through several iterations, we replaced the magic number with proper aspect ratio calculations that work correctly across all resolutions.

Claude handled the trigonometry exactly as I specified, but the geometric insight came from questioning why the "lucky solution" actually worked. In the end, Claude managed to "simplify" things and yet again remove the more accurate solution, treating the distance to the far clip plane as the perpendicular distance. Accuracy is considerably better than with the magic number, but not as good as it should be. I'll put it back when I work with this code again.

Tile Recycling Gaps and Ragged Edges

The tiling and recycling design was Claude's. We wanted infinite terrain, and of course we don't have infinite memory, so we'd need to manage some manner of buffering, recycling, and cleanup. As it turned out, this was closely connected to the missing tiles.
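A common shape for this kind of recycling, sketched here as an assumption rather than the project's actual design, keeps a fixed pool of tile rows and moves any row that falls entirely behind the camera to the far end of the field. With travel toward negative z, "behind" means a larger z than the camera's. `TileRow`, `recycleRows`, and the field of four rows are hypothetical.

```typescript
interface TileRow {
  z: number;             // world z of the row's center
  needsRebuild: boolean; // true when heights must be recomputed
}

// Move rows that are entirely behind the camera to the far end of the field.
// Travel is toward -z, so "behind" means larger z than the camera.
// Returns the number of recycle moves performed.
function recycleRows(rows: TileRow[], cameraZ: number, tileSize: number): number {
  const depth = rows.length; // rows in the field (e.g. 64)
  let recycled = 0;
  for (const row of rows) {
    // The row spans [z - tileSize/2, z + tileSize/2]; its near edge is z - tileSize/2.
    while (row.z - tileSize / 2 > cameraZ) {
      row.z -= depth * tileSize; // jump a whole field-depth ahead
      row.needsRebuild = true;   // its geometry is now stale
      recycled++;
    }
  }
  return recycled;
}

const TILE = 2048;
// Four rows laid out ahead of a camera starting at z = 0.
const rows: TileRow[] = [0, 1, 2, 3].map((i) => ({ z: -(i + 0.5) * TILE, needsRebuild: false }));
const n = recycleRows(rows, -1.2 * TILE, TILE); // camera has moved 1.2 tiles forward
console.log(n);         // 1
console.log(rows[0].z); // -9216
```

Moving a row is cheap; the `needsRebuild` flag marks the expensive part, regenerating the row's geometry for its new position, which is exactly where a recycling bug can hide.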

The animation ran beautifully -- for about 8 seconds. Some initial work crushed a small collection of mostly straightforward bugs, such as off-by-one errors, bringing the smooth run up to about 15 seconds. From there it began to look dilapidated: tiles went missing and were then redrawn correctly, or were simply "eaten away," as happened at the edges.

A bit more bug bashing brought the solid runtime to 55 seconds. The true issue was incorrect geometry regeneration for the recycled tiles. It "usually worked," but failed far too often for me to be satisfied. It was Claude that discovered the source, volunteering this (paraphrased):

We have a field 64 tiles deep (64 tiles in z-direction) and each tile is 2048 units wide (our terrainScale variable). Claude calculated this timeline:

- World depth: 64 tiles × 2048 units/tile = 131,072 units

- Camera speed: -2400 units/second along the z-axis

- Time to traverse: 131,072 units ÷ 2400 units/sec ≈ 54.6 seconds

I temporarily cut the depth to 32 tiles, and the successful runtime dropped to right around 27 seconds, exactly as it should if the recycled tiles were indeed the issue:

- Reduced world depth: 32 tiles × 2048 units/tile = 65,536 units

- Reduced traverse time: 65,536 units ÷ 2400 units/sec ≈ 27.3 seconds
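This back-of-the-envelope check is easy to reproduce, and it turns a symptom ("fails after about 55 seconds") into a testable prediction for any field depth. `traverseSeconds` is a hypothetical helper; the inputs are the figures quoted above.

```typescript
// Seconds until the camera has crossed the whole field once, i.e. until
// every tile on screen is a recycled tile.
function traverseSeconds(depthTiles: number, tileSize: number, speed: number): number {
  const worldDepth = depthTiles * tileSize; // units along z
  return worldDepth / Math.abs(speed);      // speed may be signed (-2400 = toward -z)
}

console.log(traverseSeconds(64, 2048, -2400).toFixed(1)); // "54.6"
console.log(traverseSeconds(32, 2048, -2400).toFixed(1)); // "27.3"
```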

At that point in the run, the camera has crossed the entire field once, so we are displaying and using only recycled tiles. That observation, plus some additional debugging, led to the discovery that I wasn't correctly regenerating geometry for these tiles, even though other aspects of the refactoring were functioning correctly.
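A minimal sketch of the regeneration step: recompute every vertex height from the tile's new world position whenever it is recycled. It works on a plain `Float32Array` laid out as x, y, z triples (the same layout as a Three.js `BufferGeometry` position attribute) so it runs without Three.js; `regenerateTile` and `HeightFn` are hypothetical. In Three.js you would then set `geometry.attributes.position.needsUpdate = true` and call `geometry.computeVertexNormals()`.

```typescript
type HeightFn = (x: number, z: number) => number;

// positions: [x0, y0, z0, x1, y1, z1, ...] in the tile's LOCAL space.
// originX/originZ: the tile's world position after recycling.
function regenerateTile(
  positions: Float32Array,
  originX: number,
  originZ: number,
  height: HeightFn,
): void {
  for (let i = 0; i < positions.length; i += 3) {
    const worldX = originX + positions[i];
    const worldZ = originZ + positions[i + 2];
    // Sample the surface at WORLD coordinates. Sampling at local coordinates
    // would repeat the same patch on every tile, and edges would generally
    // not line up with their neighbors.
    positions[i + 1] = height(worldX, worldZ);
  }
}

const height: HeightFn = (x, z) => Math.cos(x) + Math.cos(z);
const pos = new Float32Array([0, 0, 0, 1, 0, 1]); // two vertices, local space
regenerateTile(pos, 0, 0, height);     // tile at the world origin
console.log(pos[1]);                   // 2
regenerateTile(pos, 0, -2048, height); // same tile after being recycled ahead
```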

It was this process of mutual explanation and discovery, rather than just saying 'AI do this' and getting frustrated when it can't, that elevated this project from a quick demo to a true learning experience.

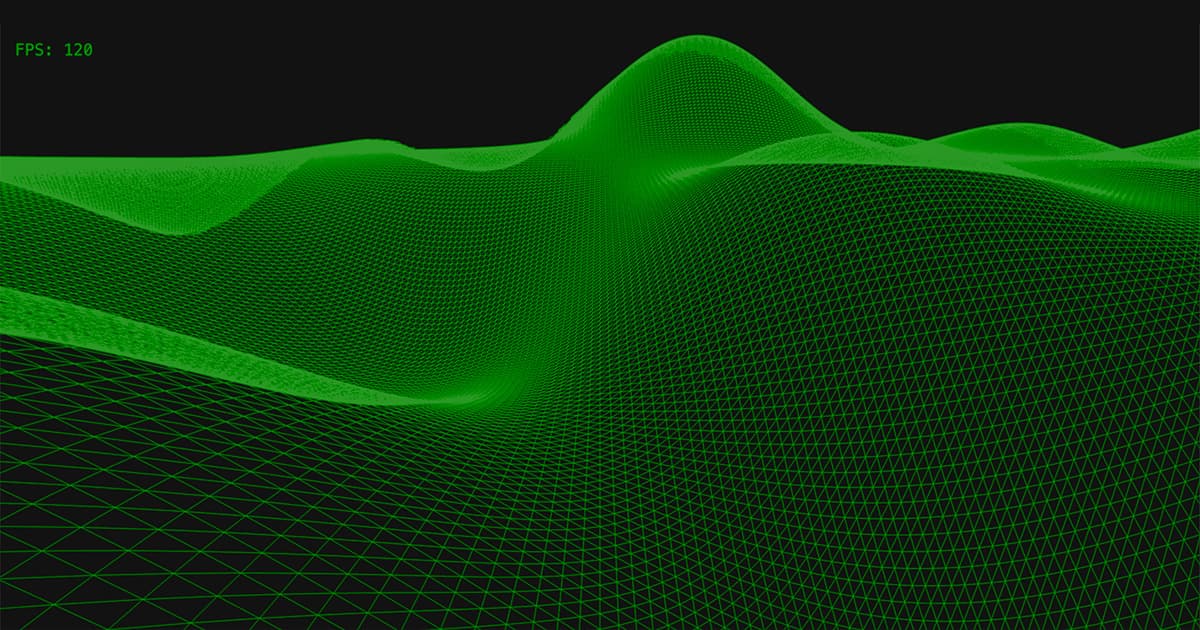

The Result

Time Spent

I spent about twelve hours on this, admittedly not in any hurry, plus a couple more hours on Sunday morning, mostly just playing with the surface parameters.

Of those twelve, about five went to drafting the initial concept and creating and deploying a functional, smoothly running app. Bug fixes other than the recycling issue took a couple of hours, one of which better rules would have saved.

I spent a while, 5-6 hours, on the recycling bug and its ramifications, including reviewing and modifying bits of code, often with Cursor's agent in "Ask" mode. Could I have done it faster myself? If I had built something very similar before, probably. But then I'd just update the respective tool guide in AI Project Guide, raise the AI's level, and go on to something bigger.

Could integration of testing into the project guide and therefore into the process save time and improve quality by reducing defects? Absolutely.

API Costs

I spent about $25 in Claude API credits. Gemini 2.5 seemed capable as well, but it was having issues with Cursor (at least for me), so I mostly used Claude. I suspect much of the cost went to resolving the tiling bug, but I don't know for sure.

Code Quality - What I Wanted vs What I Got

I got mostly what I wanted. A few extra complications here, some convoluted math there, but overall, the result completely meets the design objectives. There are still some "magic" modifiers in CosineTerrainCard.tsx. The height calculation and its amplitude variance aren't quite what I asked for, and could be simplified.

I've reviewed much of the code, though more to catch blatant bad practices and anti-patterns than to polish it to perfection. There's at least one 'dangerouslySetInnerHTML' that I'd like to get rid of. I plan to apply my automated code review process to this and see if AI can accelerate its improvement.

Conclusion

This project exceeded expectations. What started as a Friday night experiment became a demonstration of what systematic AI collaboration can achieve when applied to more intricate technical problems.

The technical results speak for themselves: smooth infinite terrain at 120 FPS, mathematically correct geometry, and mobile optimization. But the real value was in the process — discovering how structured human-AI collaboration can tackle complex debugging challenges more efficiently than either party could alone.

The $25 API cost and 12 hours of development time were well worth it, and bought more than a bit of cheap entertainment plus a probably unnecessary demo. The learning experience, and the validation of the AI Project Guide methodology's ability to accelerate precise technical work without sacrificing quality or mathematical rigor, made this genuinely valuable.

I could have easily shipped this with ragged edges, a few missing tiles, and magic numbers — and for a quick prototype to validate the concept, that would have been perfectly fine. But once you know the idea works, it's worthwhile to create a finished product that you'd actually want to use or show to others.

Most importantly, this project validates the collaborative approach I've been developing. This wasn't AI generating code or a human writing prompts; it was genuine pair programming where each participant brought irreplaceable strengths to complex problem-solving.

The infinite cosine surface runs smoothly, the math is sound, and the code is ready for whatever comes next. The methodology that made it possible is what I'm most excited about.